Summary

This case study describes how EnFuzion was used in addressing a high energy physics data analysis problem. The objective was to search for rare events of unknown (and probably very low) production rate in a sample containing mostly background events. EnFuzion accelerated this computational experiment.

Axceleon would like to thank Dr. Igor Mandic of the Jozef Stefan Institute for sharing his experience of using EnFuzion in high energy physics.

What is the high energy physics data analysis problem?

The analysis problem consists of a search for rare events of unknown (and probably very low) production rate in a sample containing mostly background events. The background is well reproduced by Monte Carlo simulation data. The researchers also have Monte Carlo data for the signal events, that is, the events they were searching for.

To separate the event categories, the researchers use the vertex fit probability, the probabilities of two different constraint fits, and particle identification. Particle identification lets the researchers choose the purity of identification: higher purity means lower efficiency. Similarly, the distributions of the vertex and constraint fit probabilities differ between signal and background events. The researchers can also vary the probability values required to accept an event, which changes the signal and background efficiencies.

The difficulty in this kind of analysis is choosing the actual cut values: because the separating quantities are correlated, the cuts cannot be optimized one at a time. In this case, the researchers wanted to maximize the statistical significance of a potential signal. This significance is described by the ratio $S = \varepsilon_s / \sqrt{\varepsilon_b}$, where $\varepsilon_s$ and $\varepsilon_b$ are the signal and background efficiencies, respectively.
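A minimal Python sketch of this figure of merit follows. It assumes that each efficiency is estimated as the fraction of Monte Carlo events passing the cuts and that S is the signal efficiency divided by the square root of the background efficiency; the square root reflects the Poisson fluctuation of the background. The function names and event counts are hypothetical and are not taken from the researchers' program.

```python
import math


def efficiency(n_passed: int, n_total: int) -> float:
    """Fraction of Monte Carlo events that survive the cuts."""
    if n_total <= 0:
        raise ValueError("n_total must be positive")
    return n_passed / n_total


def significance(eps_s: float, eps_b: float) -> float:
    """Figure of merit S = eps_s / sqrt(eps_b)."""
    if eps_b <= 0:
        raise ValueError("background efficiency must be positive")
    return eps_s / math.sqrt(eps_b)


# Hypothetical selections: a tight one keeps 60% of signal and 4% of background,
# a loose one keeps 90% of signal and 25% of background.
tight = significance(efficiency(600, 1000), efficiency(400, 10000))
loose = significance(efficiency(900, 1000), efficiency(2500, 10000))
print(f"tight: {tight:.2f}, loose: {loose:.2f}")
```

With these toy numbers the tight selection scores 3.00 and the loose one 1.80, so the tighter cut wins even though it keeps less signal. That trade-off is what the cut optimization has to resolve.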

How did EnFuzion help solve the problem?

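EnFuzion ran the 304 independent jobs of the cut scan in parallel on seven HP workstations. The whole scan finished in less than two hours, compared with roughly 12.7 hours (304 jobs at about 2.5 minutes each) on a single machine.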

How was EnFuzion used?

Preparing Input Parameters

The researchers have to find the maximum of S as a function of four cuts. The most straightforward way to find it is to vary the cuts in steps and choose the best combination. Unfortunately, this brute-force search requires a lot of CPU time. To save time, they first produce data files containing minimal information: for each event, only the values of the four separating quantities are written out. Certain regions of possible cuts can be eliminated by inspecting plots of the distributions, and the step sizes of the cut variation are chosen by eye.
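The brute-force scan can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the researchers' program: the events are hypothetical toy tuples of four quantities, an event is assumed to pass when each quantity is at least its cut value, and the cut grids are made up. The cost is the number of cut combinations times the number of events, which is why the scan gets expensive. It is also why the scan parallelizes well, since every combination can be evaluated independently.

```python
import itertools
import math
import random

# ASSUMPTION: each event is a tuple of four separating quantities in [0, 1]
# (three fit probabilities and a particle-identification score), and an event
# passes when every quantity is at least its cut value.


def passes(event, cuts):
    """Return True if the event survives all cuts."""
    return all(value >= cut for value, cut in zip(event, cuts))


def efficiency(events, cuts):
    """Fraction of events that survive the cuts."""
    return sum(passes(e, cuts) for e in events) / len(events)


def grid_search(signal, background, grids):
    """Try every combination of cut values and keep the one that maximises S."""
    best_cuts, best_s = None, -math.inf
    for cuts in itertools.product(*grids):
        eps_b = efficiency(background, cuts)
        if eps_b == 0:
            continue  # S = eps_s / sqrt(eps_b) is undefined with no background left
        s = efficiency(signal, cuts) / math.sqrt(eps_b)
        if s > best_s:
            best_cuts, best_s = cuts, s
    return best_cuts, best_s


# Toy Monte Carlo (hypothetical): signal quantities cluster near 1, background is flat.
random.seed(1)
signal = [tuple(random.betavariate(5, 1) for _ in range(4)) for _ in range(300)]
background = [tuple(random.random() for _ in range(4)) for _ in range(3000)]
grids = [[0.01, 0.05, 0.10]] * 3 + [[0.5, 0.6, 0.7, 0.8, 0.9]]

best_cuts, best_s = grid_search(signal, background, grids)
print(best_cuts, round(best_s, 2))
```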

A program that calculates S for five values of particle identification purity needs about two minutes of real time on an HP735 computer. The cuts on the vertex fit probability and the two constraint fit probabilities were its input parameters, called prob1, prob2, and prob3 in the plan file.

The cut values tested were:

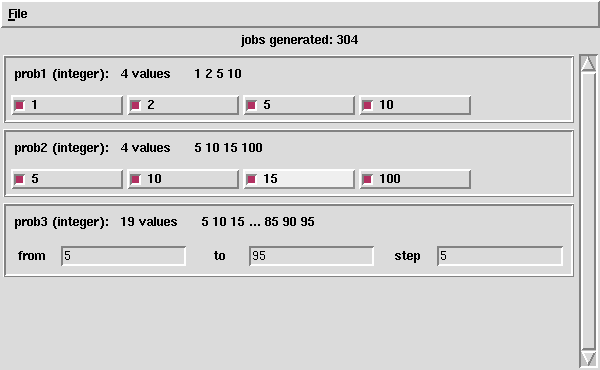

- prob1: less than 1, 2, 5, and 10 percent
- prob2: less than 5, 10, 15, and 100 percent
- prob3: greater than values from 5 to 95 percent, in steps of 5 percent
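Each job in the sweep handles one combination of the three fit-probability cuts (the particle identification purity is scanned inside the program), so the number of jobs is simply the product of the list sizes. A short Python check using the values listed above:

```python
import itertools

# Cut values from the text, in percent.
prob1_values = [1, 2, 5, 10]
prob2_values = [5, 10, 15, 100]
prob3_values = list(range(5, 100, 5))  # 5, 10, ..., 95

combinations = list(itertools.product(prob1_values, prob2_values, prob3_values))
print(len(prob3_values), len(combinations))  # 19 304
```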

Performing the Computation

The job was run using EnFuzion on a Hewlett-Packard cluster consisting of one HP C160 (PA-8000), one HP735, and five HP715 computers running HP-UX 10.20b. The following EnFuzion plan file was used:

```
#
# specify the parameters
#
parameter prob1 integer select anyof 1 2 5 10 default 1 2 5 10;
parameter prob2 integer select anyof 5 10 15 100 default 5 10 15 100;
parameter prob3 integer range from 5 to 95 step 5;

#
# specify files required for the calculations
#
task nodestart
    copy signal.ndata node:.
    copy background.data node:.
endtask

#
# specify the task to be executed
#
task main
    #
    # initial commands: write the three cut values to ftn81
    # (one per line) and link the data files to ftn87 and ftn88
    #
    node:execute echo $prob1 > ftn81
    node:execute echo $prob2 >> ftn81
    node:execute echo $prob3 >> ftn81
    node:execute ln -sf signal.ndata ftn87
    node:execute ln -sf background.data ftn88
    #
    # execute the real thing
    #
    node:execute nice time program.exe
    #
    # copy the result to the main machine
    #
    copy node:ftn82 dat/r$prob1$prob2$prob3.dat
endtask

#
# collect all the results in one file
#
task rootfinish
    execute cat dat/* > output.dat
endtask
```
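To make the per-job bookkeeping concrete, the following Python sketch mimics what the plan file sets up for each job: the contents of ftn81 produced by the three echo commands (assuming they write one value per line) and the name of the result file. It is an illustration only and does not use EnFuzion. It also checks a subtle point of the naming scheme: because the values are concatenated without a separator, two different jobs could in principle map to the same file name, but for these particular value sets all 304 names are distinct.

```python
import itertools

# Parameter values as declared in the plan file.
PROB1 = [1, 2, 5, 10]
PROB2 = [5, 10, 15, 100]
PROB3 = range(5, 100, 5)


def job_files(prob1, prob2, prob3):
    """Hypothetical helper: input written to ftn81 and the result file name."""
    ftn81 = f"{prob1}\n{prob2}\n{prob3}\n"
    result = f"dat/r{prob1}{prob2}{prob3}.dat"
    return ftn81, result


jobs = [job_files(*combo) for combo in itertools.product(PROB1, PROB2, PROB3)]
names = [result for _, result in jobs]

# Concatenation without separators is only safe if no two jobs collide.
assert len(set(names)) == len(names) == 304
print(jobs[0])
```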

From this plan file, EnFuzion generated a user interface for job execution, which was used to select the input parameters.

To cover all combinations of the input parameters, 304 jobs (4 × 4 × 19) were generated. On average, each job required about 2.5 minutes on a single HP machine. The total elapsed time for all 304 jobs on the seven HP machines was less than two hours. Here is the detailed report from EnFuzion:

```
Fri Feb 28 19:08:31 1997: ===== Run Finished =====
Fri Feb 28 19:08:31 1997: Timing Report:
Fri Feb 28 19:08:31 1997: Total Time: 01:52:14
Fri Feb 28 19:08:31 1997: Starting Node Servers: 00:00:20
Fri Feb 28 19:08:31 1997: Initializing the Root: 00:00:00
Fri Feb 28 19:08:31 1997: Initializing the Nodes: 00:00:00
Fri Feb 28 19:08:31 1997: Running User Jobs: 01:51:54
Fri Feb 28 19:08:31 1997: Completing the Nodes: 00:00:00
Fri Feb 28 19:08:31 1997: Completing the Root: 00:00:00
Fri Feb 28 19:08:31 1997: Jobs Report:
Fri Feb 28 19:08:31 1997: Total Number of Jobs Finished: 304
Fri Feb 28 19:08:31 1997: Total Number of Jobs Failed: 0
Fri Feb 28 19:08:31 1997: Node Report:
Fri Feb 28 19:08:31 1997: Jobs Finished on Node "0" on Host "merlot": 9
Fri Feb 28 19:08:31 1997: Jobs Finished on Node "1" on Host "pikolit": 95
Fri Feb 28 19:08:31 1997: Jobs Finished on Node "2" on Host "barbera": 41
Fri Feb 28 19:08:31 1997: Jobs Finished on Node "3" on Host "refosk": 38
Fri Feb 28 19:08:31 1997: Jobs Finished on Node "4" on Host "traminec": 41
Fri Feb 28 19:08:31 1997: Jobs Finished on Node "5" on Host "rulandec": 40
Fri Feb 28 19:08:31 1997: Jobs Finished on Node "6" on Host "silvanec": 40
```
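The report can be turned into a rough speedup estimate. The Python snippet below uses the per-node job counts and the total time from the report, together with the 2.5-minute average job time quoted in the text. Because the machines differ in speed, the single-machine time is only an approximation.

```python
# Per-node job counts and total time from the EnFuzion report.
jobs_per_node = {
    "merlot": 9, "pikolit": 95, "barbera": 41, "refosk": 38,
    "traminec": 41, "rulandec": 40, "silvanec": 40,
}
total_jobs = sum(jobs_per_node.values())

serial_minutes = total_jobs * 2.5          # estimated time on one machine
elapsed_minutes = 1 * 60 + 52 + 14 / 60    # Total Time: 01:52:14
speedup = serial_minutes / elapsed_minutes
parallel_efficiency = speedup / len(jobs_per_node)

# total jobs, serial hours, speedup, parallel efficiency
print(total_jobs, round(serial_minutes / 60, 1), round(speedup, 1), round(parallel_efficiency, 2))
# prints: 304 12.7 6.8 0.97
```

By this estimate the seven machines delivered a speedup of about 6.8, close to the ideal of 7.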

Results

The researchers found that the selection does not depend significantly on the values of prob1 and prob2. Although this result might have been predicted from the probability distributions, such dependencies could not be ruled out in advance because of possible correlations.

The figure shows S as a function of prob3 and the particle identification cut, for prob1 = 10 and prob2 = 15 percent. A maximum of S can be observed. Because the peak lies at the edge of the plot, the range of the particle identification cut should be extended to confirm that this is the true maximum.
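Checking whether a maximum lies on the boundary of the scanned region is easy to automate. A minimal Python sketch follows; the helper name and the grid values are hypothetical toy numbers, not the measured S values.

```python
def locate_maximum(s_grid):
    """Hypothetical helper: find the maximum of S on a grid indexed by
    (prob3 cut, particle-identification cut) and flag boundary positions."""
    rows, cols = len(s_grid), len(s_grid[0])
    i, j = max(((r, c) for r in range(rows) for c in range(cols)),
               key=lambda rc: s_grid[rc[0]][rc[1]])
    row_edge = i in (0, rows - 1)  # maximum at the first or last prob3 value
    col_edge = j in (0, cols - 1)  # maximum at the first or last PID value
    return (i, j), row_edge, col_edge


# Toy values: S is still rising at the last particle-identification column.
toy = [
    [1.0, 1.2, 1.4],
    [1.1, 1.5, 1.9],
    [1.0, 1.3, 1.6],
]
print(locate_maximum(toy))  # ((1, 2), False, True)
```

A maximum flagged on the particle-identification axis corresponds to the situation described above: the scan range should be extended before the point is accepted as the true maximum.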

Further Information

This case study describes some basic capabilities of EnFuzion. You can find out more about using EnFuzion in the EnFuzion User Manual.